What are LLMs?

Large language models (LLMs) are AI systems trained on very large amounts of text, often billions or trillions of words. They are built for a range of language-related tasks, such as extracting information and holding conversations in natural language. Well-known examples include ChatGPT, Llama 2, and PaLM 2.

Challenges of Working with Large Language Models

Working with large language models (LLMs) presents several significant challenges. These challenges include:

1. Data Requirements

LLMs necessitate a vast amount of data for training. The training data often consists of billions or even trillions of tokens. Acquiring and processing such a massive volume of data can be a complex and resource-intensive task.
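As a rough illustration of the processing step, the sketch below normalizes whitespace and drops exact duplicates from a small in-memory corpus. The function names `normalize` and `deduplicate` are hypothetical, and the exact-hash approach is an assumption made for brevity; pipelines working at the scale of billions of tokens would more likely stream the data and also detect near-duplicates.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Collapse runs of whitespace into single spaces and strip the ends."""
    return re.sub(r"\s+", " ", text).strip()


def deduplicate(docs: list[str]) -> list[str]:
    """Keep the first occurrence of each document, compared case-insensitively."""
    seen: set[str] = set()
    unique: list[str] = []
    for doc in docs:
        cleaned = normalize(doc)
        if not cleaned:
            continue  # skip documents that are empty after cleaning
        digest = hashlib.sha256(cleaned.lower().encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(cleaned)
    return unique


# Hypothetical toy corpus: two duplicates, one empty entry, one unique document.
corpus = ["Hello   world", "hello world", "", "Another document\n"]
print(deduplicate(corpus))
```

Hashing the normalized text keeps memory use bounded by the number of unique documents rather than their total size, which is one reason this pattern is a common starting point.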

2. Computational Resources

Training and utilizing LLMs demand substantial computational power. The computational requirements increase with the size and complexity of the model. High-performance hardware, such as powerful GPUs or specialized cloud infrastructure, is typically necessary to handle the intense computational demands.
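To make the link between model size and hardware concrete, here is a back-of-the-envelope sketch that estimates the memory needed just to hold a model's weights. The 7-billion-parameter figure and the helper name `weight_memory_gb` are illustrative assumptions. The estimate deliberately ignores activations, attention caches, gradients, and optimizer state, so real requirements, especially for training, will be noticeably higher.

```python
# Bytes used to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Memory for the weights alone, in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# Hypothetical 7-billion-parameter model at each precision.
for dtype in BYTES_PER_PARAM:
    print(f"{dtype}: {weight_memory_gb(7e9, dtype):.1f} GB")
```

Under these assumptions the weights alone take about 28 GB at 32-bit precision and 14 GB at 16-bit precision, which is why lower-precision formats are often considered when serving models on a single GPU.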

3. Expertise in Prompt Engineering

Using LLMs effectively requires expertise in prompt engineering. Crafting prompts or inputs that lead the model to generate the desired outputs can be challenging. Fine-tuning the model and optimizing prompt design often require a deep understanding of natural language processing.
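One common way to keep prompts consistent is to build them from a template rather than writing them by hand each time. The sketch below uses Python's standard `string.Template`; the template wording, the `build_prompt` helper, and the support-assistant scenario are all hypothetical and only meant to show the pattern, not a recommended prompt.

```python
from string import Template

# Hypothetical prompt template: role, output constraint, fallback rule, then inputs.
PROMPT = Template(
    "You are a support assistant for $product.\n"
    "Answer in at most $max_sentences sentences.\n"
    "If the answer is not in the context, say you do not know.\n\n"
    "Context:\n$context\n\n"
    "Question: $question\n"
    "Answer:"
)


def build_prompt(product: str, context: str, question: str,
                 max_sentences: int = 3) -> str:
    """Fill the template; substitute() raises KeyError if a field is missing."""
    return PROMPT.substitute(
        product=product,
        context=context.strip(),
        question=question.strip(),
        max_sentences=max_sentences,
    )


print(build_prompt(
    product="Acme Router",
    context="Hold the reset button for ten seconds to reboot the device.",
    question="How do I reboot?",
))
```

Keeping the template in one place makes it possible to change the wording once and compare the results, which is most of what iterating on a prompt involves in practice.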

4. Bias

LLMs can inherit biases from the data they are trained on, which can lead to biased outputs. Anyone deploying these models should be aware of this limitation.

5. Explainability and Transparency

LLMs are complex, and their decision-making processes can be opaque. Improving the explainability and transparency of these models is an area of ongoing research and development.

Addressing these challenges is crucial for researchers, developers, and practitioners working with large language models. By overcoming these hurdles, the potential of LLMs can be harnessed for various applications and advancements in natural language understanding and generation.

What is LLMOps?

LLMOps, short for Large Language Model Operations, refers to the practices and tools used to make large language models work effectively in real-world applications. In simpler terms, LLMOps focuses on how to build, deploy, and maintain large language models.

When you use large language models in real-world situations, LLMOps helps ensure that they are safe for users, behave reliably, and respond within acceptable latency and cost limits. It provides the practices and tooling needed to optimize the performance and reliability of these models.

By using LLMOps, developers can make the process of building and deploying large language models more efficient, saving time and money. It also helps improve the performance and reliability of the models and makes it easier to manage, scale, and maintain them effectively.

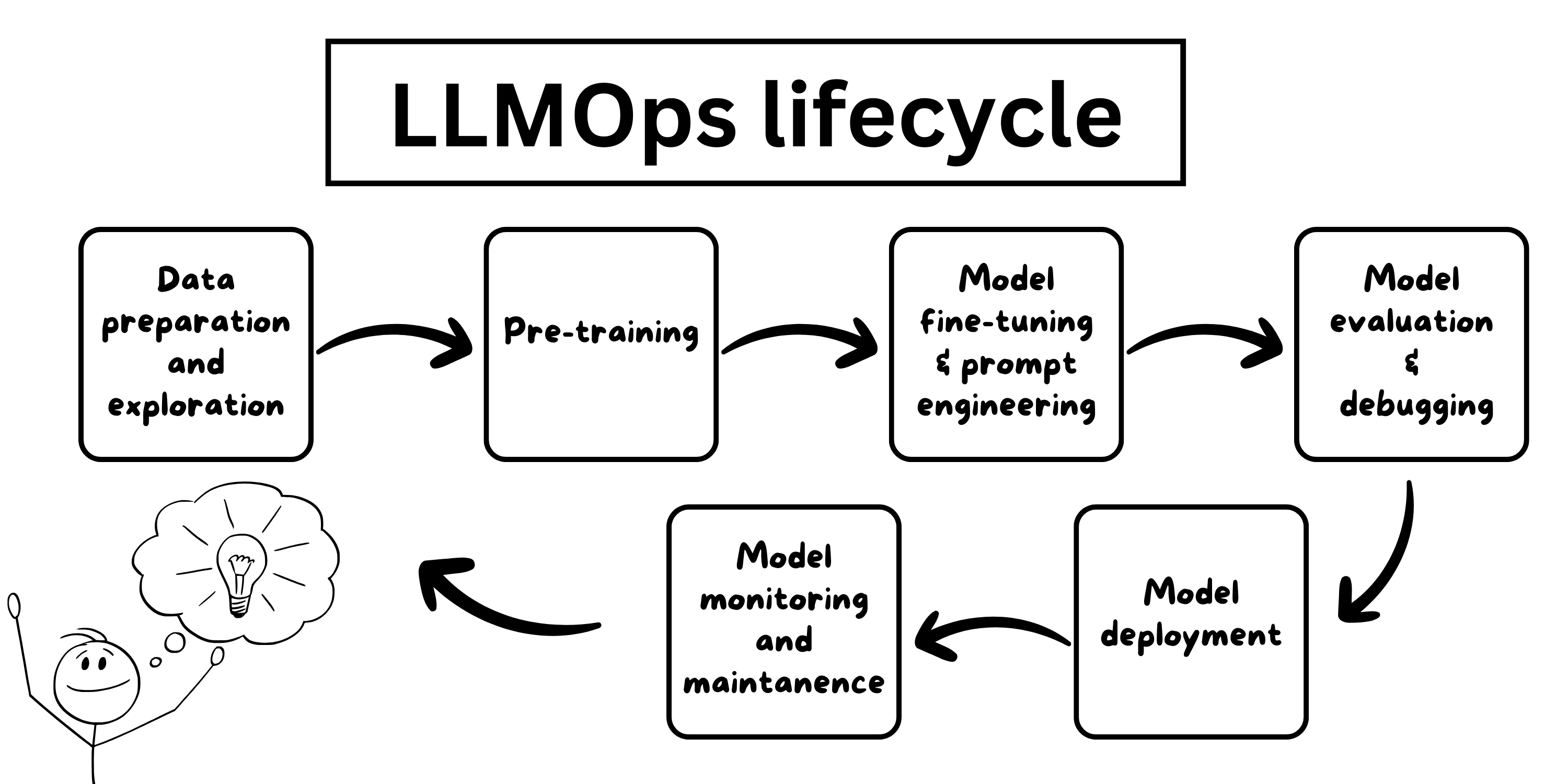

LLMOps Lifecycle

The LLMOps lifecycle refers to the stages that a language model typically goes through in its development and deployment process. These stages are as follows:

- Data preparation and exploration: This stage involves preparing, exploring, and cleaning the data that will be used to train the language model. Similar to traditional machine learning, it is important to ensure that the data is of high quality and suitable for training the model.

- Pre-training: Pre-training involves training a language model on a large dataset to learn general language patterns and features. It is an optional step in the LLMOps lifecycle, because teams building with large language models commonly start from a model that has already been pre-trained on a large corpus of text.

- Model fine-tuning and prompt engineering: In this stage, the language model’s performance is improved for a specific task. This can be done by fine-tuning the pre-trained model using task-specific data. Additionally, prompt engineering involves designing and optimizing the prompts or queries given to the model to elicit desired responses.

- Model evaluation and debugging: Once a model is trained and fine-tuned, it needs to be evaluated to assess its quality. Different evaluation tactics and metrics can be used to measure the performance of the language model. Debugging involves identifying and fixing any issues or errors that may arise during the evaluation process.

- Model deployment: This stage makes the model accessible and operational for practical use. It often includes integrating the model into an application or system that takes user inputs and generates appropriate responses. Deploying a language model to production used to be a challenging task, but many solutions are now available that make it easier.

- Model monitoring and maintenance: Once the model is deployed, ongoing monitoring and maintenance are essential. With a language model, it is important to monitor user interactions, track the model’s performance consistency, and collect user feedback to improve the model over time. Additionally, traditional MLOps concerns like model drift (when the model’s performance deteriorates over time) and latency (response time) need to be monitored and managed.
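The evaluation and monitoring stages above can be sketched in a few lines. The example below scores a model on a handful of test cases using exact-match accuracy and records latency for each call. Everything here is an assumption for illustration: `fake_model` is a stand-in for a real model call, the test cases are invented, and exact match is only one of many possible metrics (open-ended text generation usually needs something more forgiving).

```python
import statistics
import time
from typing import Callable


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; returns canned answers."""
    answers = {"Capital of France?": "Paris", "2 + 2 = ?": "4"}
    return answers.get(prompt, "I do not know")


def evaluate(model: Callable[[str], str],
             cases: list[tuple[str, str]]) -> dict[str, float]:
    """Run each (prompt, expected) case, tracking accuracy and latency."""
    correct = 0
    latencies: list[float] = []
    for prompt, expected in cases:
        start = time.perf_counter()
        output = model(prompt)
        latencies.append(time.perf_counter() - start)
        # Exact match after trimming whitespace and ignoring case.
        if output.strip().lower() == expected.strip().lower():
            correct += 1
    return {
        "accuracy": correct / len(cases),
        "median_latency_s": statistics.median(latencies),
        "max_latency_s": max(latencies),
    }


# Invented test cases; the third is one the stand-in model cannot answer.
cases = [
    ("Capital of France?", "Paris"),
    ("2 + 2 = ?", "4"),
    ("Capital of Peru?", "Lima"),
]
report = evaluate(fake_model, cases)
print(report)
```

Running the same function on a schedule against a fixed set of cases is one simple way to notice drift or latency regressions after deployment, since any change in the report points to a change in the model or its serving environment.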

Comparison between MLOps and LLMOps

MLOps and LLMOps both refer to operational practices in the field of machine learning, but they have different focuses and objectives. Here’s a comparison between the two:

- Definition and Focus:

- MLOps (Machine Learning Operations): MLOps is an approach that combines machine learning, software engineering, and DevOps practices to streamline the deployment, management, and maintenance of machine learning models in production. It focuses on automating the end-to-end lifecycle of machine learning systems, including data preparation, model training, deployment, monitoring, and retraining.

- LLMOps (Large Language Model Operations): LLMOps refers specifically to operational practices for large language models, such as those behind ChatGPT. It involves managing the lifecycle of language models, including training, fine-tuning, deployment, monitoring, and version control. LLMOps aims to ensure the reliability, performance, and safety of language models throughout their lifecycle.

- Applications:

- MLOps: MLOps is applicable to a wide range of machine learning projects and models. It is commonly used in various domains, such as computer vision, natural language processing, recommendation systems, and predictive analytics.

- LLMOps: LLMOps is specifically focused on language models and their applications, which include natural language processing, dialogue systems, language translation, text generation, and conversational agents.

- Challenges and Considerations:

- MLOps: MLOps involves challenges related to data management, model versioning, reproducibility, scalability, infrastructure management, and continuous integration/continuous deployment (CI/CD). It requires collaboration between data scientists, machine learning engineers, software developers, and operations teams.

- LLMOps: LLMOps shares some challenges with MLOps, such as data management and versioning. However, it also has specific considerations related to language model biases, ethical considerations, content moderation, and fine-tuning approaches to mitigate potential risks associated with language model behavior.

- Tools and Technologies:

- MLOps: MLOps relies on a variety of tools and technologies, such as version control systems (e.g., Git), containerization (e.g., Docker), orchestration frameworks (e.g., Kubernetes), continuous integration/continuous deployment (CI/CD) platforms, monitoring systems, and automation tools.

- LLMOps: LLMOps uses many of the same tools and technologies as MLOps, but with a focus on language model-specific tools for training, fine-tuning, and deployment. It may also involve specialized libraries and frameworks for natural language processing and text generation.

In summary, while MLOps is a broader approach that encompasses managing the entire lifecycle of machine learning models, LLMOps specifically focuses on the operational practices related to language models. LLMOps addresses the unique challenges and considerations associated with language models, ensuring their reliability, safety, and performance.

Conclusion

In conclusion, MLOps and LLMOps are operational practices in the field of machine learning, but they have different focuses and objectives. MLOps is a broader approach that encompasses managing the lifecycle of various machine learning models, while LLMOps specifically addresses the operational practices related to language models. MLOps aims to streamline the deployment, management, and maintenance of machine learning systems, while LLMOps focuses on ensuring the reliability, safety, and performance of language models. Both approaches involve challenges and considerations specific to their respective domains and rely on tools and technologies to automate and optimize operational processes.