Artificial Intelligence (AI) is a must-learn topic today because its use is expanding rapidly across all fields. As AI becomes more integrated into various industries, understanding its core concepts is essential. Without this knowledge, you might fall behind and end up spending much more time on tasks that could be streamlined with AI, which could impact your career.

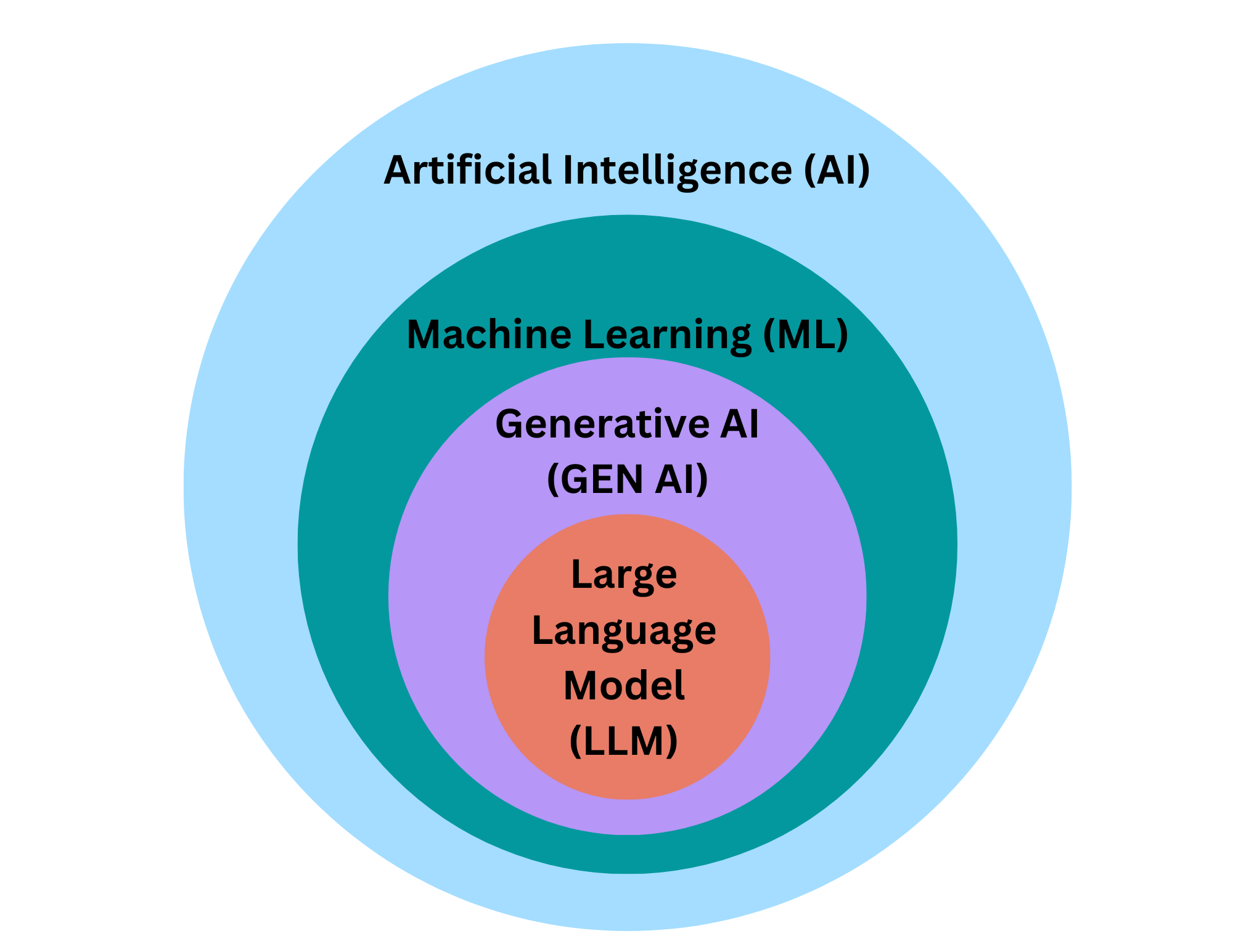

In this article, we’ll explore the connections between key AI concepts like Machine Learning (ML), Generative AI (Gen AI), and Large Language Models (LLMs). Seeing how these ideas build on each other will give you a clearer picture of how AI works and why it matters. It also helps you understand not only the technology itself but also how to use it effectively in real-world situations. We’ll also cover MLOps and LLMOps, the practices used to deploy, monitor, and maintain machine learning models and language models in production. This knowledge will help you make the most of AI and stay ahead in an increasingly tech-driven world.

What is Artificial Intelligence (AI)?

Artificial Intelligence (AI) refers to the field of computer science focused on creating systems that can perform tasks requiring human-like intelligence. This includes learning from experience, understanding natural language, recognizing patterns, and making decisions. Essentially, AI encompasses all methods and technologies used to replicate and simulate human intelligence in machines.

Historical Context

The evolution of Artificial Intelligence (AI) began in the 1950s with pioneers like Alan Turing and John McCarthy, who laid the groundwork for the field. Early AI systems were rule-based and focused on simple tasks. In the 1980s, expert systems emerged, imitating human decision-making. The 2000s saw the rise of machine learning, allowing AI to improve from data. Today, advancements in deep learning and neural networks have made AI more powerful and flexible than ever.

Artificial Intelligence (AI) Applications

Artificial Intelligence (AI) is deeply integrated into our daily lives, often in ways we might not immediately recognize. For instance:

- Digital Assistants: Tools like Siri and Google Assistant help with voice commands and everyday tasks.

- GPS Guidance: AI optimizes routes and provides real-time traffic updates for more efficient travel.

- Autonomous Vehicles: Carmakers such as Tesla use AI in their driver-assistance systems for navigation and safety.

- Generative AI Tools: OpenAI’s ChatGPT generates human-like text for tasks like writing and customer support.

These examples show how AI simplifies and enhances many aspects of our daily routines.

What is Machine Learning (ML)?

Machine Learning (ML) is a subset of Artificial Intelligence (AI) that develops algorithms allowing machines to learn from data and make predictions or decisions. Rather than being explicitly programmed for every scenario, these algorithms adapt based on the data they are trained on. What the algorithm learns is captured mathematically in a machine learning model. AI as a whole includes any technique for making machines behave intelligently, including hand-written rules; ML specifically focuses on improving a model’s performance by learning from data.
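To make “learning from data” concrete, here is a minimal Python sketch. Instead of hard-coding the rule that links inputs to outputs, the program estimates the two parameters of a simple linear model, y ≈ w·x + b, from example pairs using ordinary least squares. Real ML models have far more parameters, but the idea is the same. The function names and training data are illustrative assumptions, not taken from any library.

```python
from typing import List, Tuple


def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Fit y ≈ w * x + b by ordinary least squares and return (w, b)."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    if var_x == 0:
        raise ValueError("x values must not all be identical")
    w = cov_xy / var_x
    b = mean_y - w * mean_x
    return w, b


def predict(w: float, b: float, x: float) -> float:
    """Use the learned parameters to make a prediction for a new input."""
    return w * x + b


# Hypothetical training data: the program is never told the rule y = 2x + 1;
# it recovers it from the examples.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]
w, b = fit_line(xs, ys)
print(f"learned w={w:.2f}, b={b:.2f}, prediction for x=10: {predict(w, b, 10.0):.2f}")
```

Running it prints `learned w=2.00, b=1.00, prediction for x=10: 21.00`: the fitted parameters reproduce the pattern in the examples and carry over to an input the program never saw.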

Core Techniques in Machine Learning (ML)

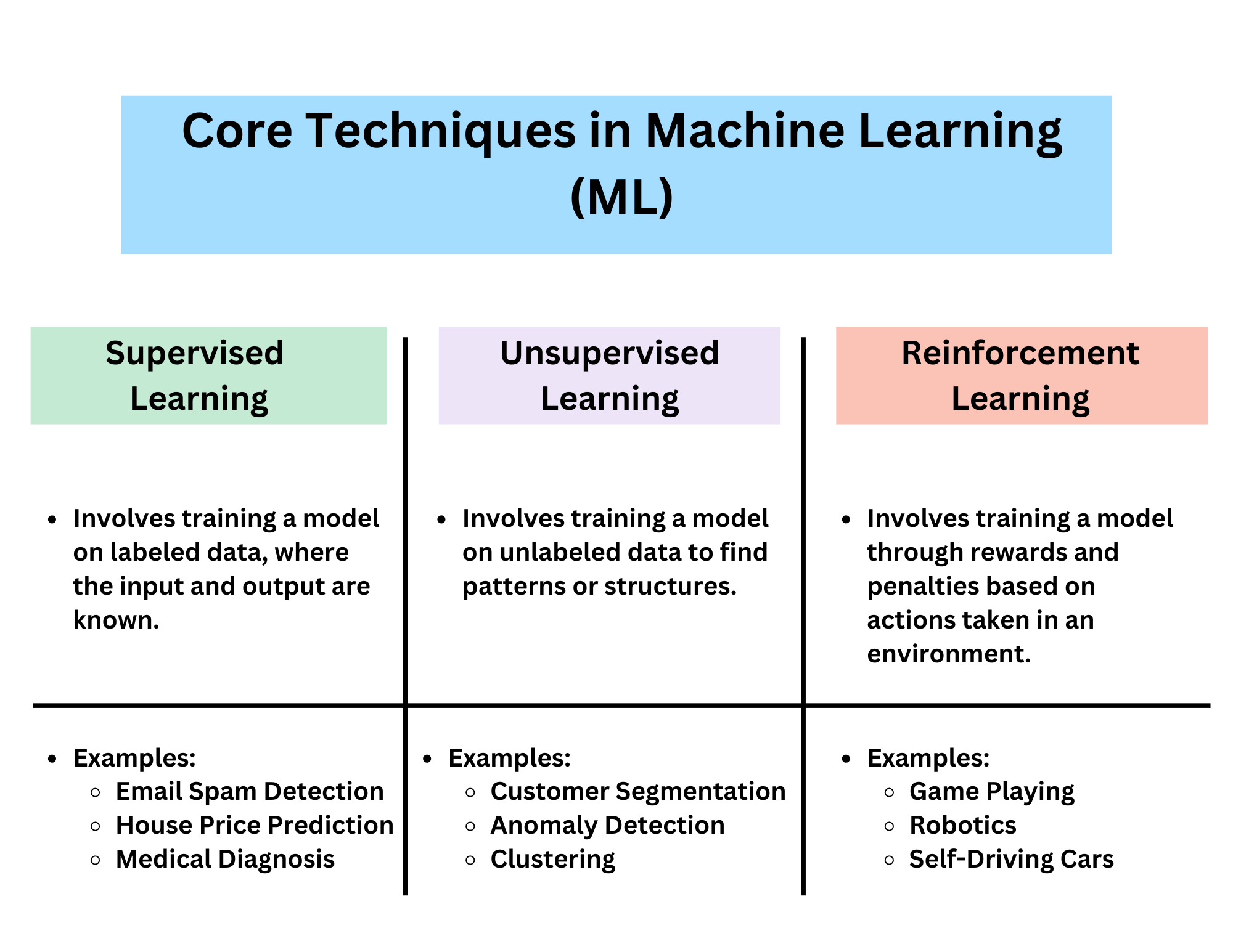

Understanding the core techniques of Machine Learning (ML) is essential for utilizing its capabilities. These methods enable machines to learn from data and make smart decisions or predictions. Here’s a brief overview of the key techniques used in ML:

- Supervised Learning: Involves training a model on labeled data, where the input and output are known. For example, email spam detection uses supervised learning to identify spam messages based on pre-labeled examples.

- Unsupervised Learning: Involves training a model on data without labeled responses. It helps find hidden patterns or groupings in data. An example is customer segmentation, where businesses use unsupervised learning to group customers based on purchasing behavior.

- Reinforcement Learning: Focuses on training models to make sequences of decisions by rewarding desired behaviors and penalizing undesired ones. An example is optimizing traffic light timings. AI systems learn to adjust light schedules to minimize congestion and improve traffic flow based on real-time traffic patterns and feedback.
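The spam-detection example of supervised learning can be sketched in a few lines of Python. This is a minimal illustration assuming a multinomial naive Bayes classifier, one common choice for text classification; the article does not name a specific algorithm, and the tiny labeled dataset is made up. Training counts how often each word appears in spam and non-spam (“ham”) emails, and classification picks the label whose word statistics best match a new message.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple


def tokenize(text: str) -> List[str]:
    """Lowercase and split on whitespace (a deliberately simple tokenizer)."""
    return text.lower().split()


def train(examples: List[Tuple[str, str]]):
    """Count words per label from labeled (text, label) pairs."""
    word_counts: Dict[str, Counter] = {}
    label_counts: Counter = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(tokenize(text))
    vocab = {w for counts in word_counts.values() for w in counts}
    return word_counts, label_counts, vocab


def classify(text: str, word_counts, label_counts, vocab) -> str:
    """Pick the label with the highest log-probability (naive Bayes, add-one smoothing)."""
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, counts in word_counts.items():
        score = math.log(label_counts[label] / total_docs)  # prior probability of the label
        total_words = sum(counts.values())
        for word in tokenize(text):
            # Add-one (Laplace) smoothing so unseen words don't zero out the probability
            score += math.log((counts[word] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical labeled training data: inputs (emails) with known outputs (labels)
training_data = [
    ("win a free prize now", "spam"),
    ("claim your free money", "spam"),
    ("meeting agenda for monday", "ham"),
    ("please review the project report", "ham"),
]
model = train(training_data)
print(classify("free prize money", *model))           # spam
print(classify("project meeting on monday", *model))  # ham
```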

These techniques and examples illustrate how ML is applied to solve specific problems and make data-driven decisions across various domains.
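Customer segmentation, the unsupervised learning example above, is often done with a clustering algorithm such as k-means. The sketch below assumes k-means on two made-up features per customer (monthly spend and visits per month). No labels are provided: the algorithm discovers the groups itself by alternating between assigning each customer to the nearest cluster center and moving each center to the average of its customers.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def kmeans(points: List[Point], k: int, iterations: int = 10) -> List[int]:
    """Group points into k clusters; returns a cluster index for each point."""
    # Simple deterministic initialization: use the first k points as centroids.
    centroids = list(points[:k])
    assignments = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        assignments = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its assigned points.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # keep the old centroid if a cluster becomes empty
                centroids[c] = (
                    sum(p[0] for p in members) / len(members),
                    sum(p[1] for p in members) / len(members),
                )
    return assignments


# Hypothetical customers: (monthly spend, visits per month)
customers = [(20, 1), (500, 12), (25, 2), (480, 10), (30, 1), (520, 11)]
print(kmeans(customers, k=2))
```

The first, third, and fifth customers end up in one group (low spend, few visits) and the rest in the other. In practice you would scale features to comparable ranges first and use a more careful initialization such as k-means++, which libraries like scikit-learn provide.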

What is MLOps?

MLOps is an approach that combines machine learning with DevOps to deploy, monitor, and manage ML models in production. It ensures that machine learning models are effectively integrated into business operations, remain performant over time, and adapt to changing data and conditions.

Examples of MLOps

- Model Deployment: Automating the process of moving models from development to production.

- Monitoring: Continuously tracking model performance to ensure it stays accurate over time.

- Version Control: Managing different versions of models to track changes and improvements.
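The three MLOps practices listed above can be illustrated with a toy, in-memory sketch. Everything here (`ModelRegistry`, `needs_retraining`, the 0.05 tolerance) is hypothetical and only shows the idea: each trained model gets a version number, one version is promoted to production, and live accuracy is compared with the accuracy measured at training time. Dedicated tools such as MLflow provide production-grade versions of these capabilities.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ModelVersion:
    version: int
    accuracy: float  # accuracy measured on a validation set at training time


@dataclass
class ModelRegistry:
    """Toy in-memory registry: tracks versions and which one is in production."""
    versions: Dict[int, ModelVersion] = field(default_factory=dict)
    production_version: int = 0

    def register(self, accuracy: float) -> ModelVersion:
        # Version control: every new model gets a new version number
        mv = ModelVersion(version=len(self.versions) + 1, accuracy=accuracy)
        self.versions[mv.version] = mv
        return mv

    def deploy(self, version: int) -> None:
        # Deployment: promote a registered version to production
        if version not in self.versions:
            raise KeyError(f"unknown model version {version}")
        self.production_version = version


def needs_retraining(baseline: float, recent_correct: List[bool], tolerance: float = 0.05) -> bool:
    """Monitoring: flag the model if live accuracy drops well below its baseline."""
    if not recent_correct:
        return False
    live_accuracy = sum(recent_correct) / len(recent_correct)
    return live_accuracy < baseline - tolerance


registry = ModelRegistry()
v1 = registry.register(accuracy=0.92)
registry.deploy(v1.version)
# Hypothetical production feedback: 8 of the last 10 predictions were correct
recent = [True] * 8 + [False] * 2
baseline = registry.versions[registry.production_version].accuracy
print(needs_retraining(baseline, recent))
```

Here live accuracy (0.80) has fallen more than the tolerance below the baseline (0.92), so the check returns `True` and the model would be flagged for retraining.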

What is Generative AI (Gen AI)?

Generative AI (Gen AI) is a subset of machine learning designed to create new, original content such as text, images, or music. Traditional AI systems mostly analyze and interpret existing data, for example by classifying an email as spam. Generative models instead learn the patterns in a training dataset and produce new data that resembles it.
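The idea of creating new data similar to an existing dataset can be shown with a deliberately tiny generative model: a character-level bigram model (a simple Markov chain). It learns which character tends to follow which in a handful of example names, then samples new strings one character at a time. Modern Gen AI models are vastly larger neural networks, so treat this only as an illustration of the principle; the training names are made up.

```python
import random
from collections import defaultdict
from typing import Dict, List


def train_bigrams(corpus: List[str]) -> Dict[str, List[str]]:
    """Record which character follows which in the training words."""
    transitions: Dict[str, List[str]] = defaultdict(list)
    for word in corpus:
        padded = "^" + word + "$"  # ^ marks the start of a word, $ marks the end
        for current, nxt in zip(padded, padded[1:]):
            transitions[current].append(nxt)
    return transitions


def generate(transitions: Dict[str, List[str]], rng: random.Random, max_len: int = 12) -> str:
    """Sample a new word one character at a time from the learned transitions."""
    out, current = [], "^"
    while len(out) < max_len:
        # Characters that followed `current` more often in training are picked more often
        current = rng.choice(transitions[current])
        if current == "$":
            break
        out.append(current)
    return "".join(out)


names = ["anna", "hannah", "joanna", "diana", "ariana"]
model = train_bigrams(names)
rng = random.Random(0)  # fixed seed so the samples are reproducible
print([generate(model, rng) for _ in range(5)])
```

Every generated string is built only from character transitions seen in training, which is why the samples share the training names’ letter patterns; with so little data, some samples will repeat a training name outright.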

Examples of Generative AI (Gen AI)

- Text Generation: GPT-3 and Gemini generate human-like text, making them useful for chatbots, content creation, and drafting written material.

- Image Generation: DALL-E creates detailed images based on text descriptions, turning written prompts into visual art.

- Music Generation: AI tools can compose original music pieces, generating melodies and harmonies with minimal human input.

Generative AI showcases how technology can go beyond analysis to innovate and create, offering new possibilities in various fields.

Key Patterns for Building Generative AI Applications

Building Generative AI applications can be approached in different ways. Here are two key patterns to consider:

- Create and Train Your Own Gen AI Model:

  - Frameworks: Use neural network frameworks such as PyTorch or TensorFlow.

  - Time-Intensive: Training a model from scratch usually takes considerable time.

  - Steps Involved: Iterate on the model architecture and hyperparameters, and collect and prepare large amounts of training data.

- Use and Improve Pre-Trained Models:

  - Pre-Trained Models: Start from an existing pre-trained model, most commonly a Large Language Model (LLM).

  - Fine-Tuning: Improve these models by fine-tuning them for specific tasks or domains.

Key Patterns for Improving Responses with Pre-Trained LLMs

To enhance the effectiveness of pre-trained Large Language Models (LLMs), several key strategies can be employed:

- Prompt Engineering:

  - Crafting specific, detailed prompts to guide the model’s responses. For example, instead of asking “What is AI?” you might ask “Can you explain what artificial intelligence (AI) is and give examples of its applications?” This helps the model generate more accurate and relevant answers.

- Retrieval Augmented Generation (RAG):

  - Combining an LLM with a system that fetches information from external sources and adds it to the prompt. For instance, if the model needs to generate a report about recent events, RAG can pull in the latest news articles so the information is current and accurate.

- Fine-Tuning of Pre-Trained Models:

  - Further training a pre-trained model on a specialized dataset. For example, if you need an AI to understand medical terminology better, you can fine-tune it on medical texts. This makes the model’s responses more accurate and relevant to that field.
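Prompt engineering and RAG often work together: relevant documents are retrieved first, then inserted into a carefully structured prompt. The sketch below assumes a tiny in-memory knowledge base and uses simple word overlap as a stand-in for the embedding-based vector search that production RAG systems typically use. The actual LLM call is omitted because it depends on the model provider’s API; all function names are hypothetical.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase words with punctuation stripped."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: List[str], top_k: int = 2) -> List[str]:
    """Rank documents by how many words they share with the query (stand-in for vector search)."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:top_k]


def build_prompt(question: str, context: List[str]) -> str:
    """Prompt engineering: give the model a role, the retrieved context, and clear instructions."""
    context_block = "\n".join(f"- {c}" for c in context)
    return (
        "You are a helpful assistant. Answer using only the context below.\n"
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {question}\nAnswer:"
    )


# Hypothetical knowledge base
docs = [
    "The quarterly report was published on the company blog.",
    "Our support team is available on weekdays from 9 to 5.",
    "The new pricing plan takes effect next month.",
]
question = "When does the new pricing plan take effect?"
prompt = build_prompt(question, retrieve(question, docs, top_k=1))
print(prompt)  # this augmented prompt would then be sent to the LLM
```

The pricing document shares the most words with the question, so it is the one placed in the prompt’s context section; the instructions telling the model to rely only on that context are the prompt-engineering part.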

These techniques help tailor responses to be more accurate, up-to-date, and contextually appropriate, making your AI applications more effective and useful.

What is a Large Language Model (LLM)?

Large Language Models (LLMs) are AI systems designed to understand and generate human-like text. Trained on extensive text data, these models learn language patterns and can perform tasks like writing, answering questions, and creating content. LLMs are built with deep learning, a branch of machine learning, and most modern LLMs use the transformer neural network architecture. They are a key example of Generative AI because they create new text based on learned patterns and the prompts they are given, producing coherent and relevant content.
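Under the hood, an LLM generates text one token (a word or word fragment) at a time. At each step the neural network assigns a score, called a logit, to every token in its vocabulary; the softmax function turns these scores into probabilities, and the next token is sampled from that distribution. The sketch below shows only that final step, with made-up scores for three candidate tokens; a real model scores many thousands of tokens. A temperature parameter controls how random the sampling is.

```python
import math
import random
from typing import Dict


def softmax(logits: Dict[str, float], temperature: float = 1.0) -> Dict[str, float]:
    """Turn raw model scores (logits) into probabilities; lower temperature sharpens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {tok: s / temperature for tok, s in logits.items()}
    max_s = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - max_s) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def sample_next_token(logits: Dict[str, float], temperature: float, rng: random.Random) -> str:
    """Pick the next token at random, weighted by its probability."""
    probs = softmax(logits, temperature)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


# Hypothetical scores a model might assign to candidate next tokens
# after the prompt "The cat sat on the"
logits = {"mat": 3.0, "sofa": 2.0, "moon": 0.5}
print(softmax(logits, temperature=1.0))
print(softmax(logits, temperature=0.5))  # probability mass concentrates on "mat"
print(sample_next_token(logits, temperature=0.7, rng=random.Random(42)))
```

Lowering the temperature concentrates probability on the top-scoring token (“mat” rises from about 0.69 at temperature 1.0 to about 0.88 at 0.5), which makes the output more predictable; higher temperatures make it more varied.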

Examples of Large Language Models (LLMs)

LLMs are the most common type of Gen AI model. They are trained on vast amounts of text so that they can generate natural language in response to prompts, and they power assistants such as ChatGPT, Gemini, and Claude. Notable examples include:

- GPT-4 (OpenAI): Creates coherent and relevant text for various applications, including content generation and conversational AI.

- Claude (Anthropic): Focuses on ethical and safe text generation, aiming for accurate and contextually appropriate outputs.

- LaMDA (Google): Specializes in improving conversational AI for more natural and engaging dialogues.

What is LLMOps?

LLMOps is a specialized approach to managing Large Language Models (LLMs). It focuses on deploying, monitoring, and maintaining these models so they function effectively across applications. LLMOps applies the general principles of MLOps, such as managing a model’s lifecycle, to the specific challenges of LLMs, including scalability, efficiency, and ethical considerations.
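One small piece of LLMOps in practice is wrapping every model call so that it can be monitored. The sketch below is hypothetical: `fake_llm` stands in for a real model API, word counts are a rough proxy for the token counts that drive cost, and a keyword check is a placeholder for real content moderation. It records the prompt version, latency, and request size for each call, the kinds of signals teams watch when addressing scalability, efficiency, and ethical concerns.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class CallRecord:
    prompt_version: str
    latency_s: float
    prompt_words: int
    response_words: int
    flagged: bool


@dataclass
class LLMMonitor:
    """Wraps an LLM call and records operational metrics for each request."""
    blocked_terms: List[str]  # lowercase terms that should never appear in a response
    records: List[CallRecord] = field(default_factory=list)

    def call(self, llm: Callable[[str], str], prompt: str, prompt_version: str) -> str:
        start = time.perf_counter()
        response = llm(prompt)
        latency = time.perf_counter() - start
        # Very rough safety check: flag responses containing blocked terms
        flagged = any(term in response.lower() for term in self.blocked_terms)
        self.records.append(CallRecord(
            prompt_version=prompt_version,
            latency_s=latency,
            # Word counts are a crude proxy; real systems count model tokens
            prompt_words=len(prompt.split()),
            response_words=len(response.split()),
            flagged=flagged,
        ))
        return "[response withheld]" if flagged else response


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model API call (hypothetical)."""
    return "LLMOps applies MLOps practices to large language models."


monitor = LLMMonitor(blocked_terms=["password"])
print(monitor.call(fake_llm, "What is LLMOps?", prompt_version="v1"))
print(monitor.records[0].prompt_version, monitor.records[0].response_words)
```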

For a deeper look at this topic, it’s worth reading Understand LLMOps: A Comprehensive Guide.

Conclusion

AI is a broad field encompassing various intelligent systems, while ML is a subset focused on enabling machines to learn from data. Within ML, Gen AI stands out by creating original content, and LLMs are a specific kind of Gen AI model focused on understanding and generating human-like text.

To effectively manage and deploy these advanced models, practices like MLOps and LLMOps are essential. MLOps combines ML with DevOps to streamline the deployment, monitoring, and maintenance of ML models, while LLMOps specifically addresses the challenges related to managing LLMs. Together, these practices ensure that AI technologies are not only cutting-edge but also practical, reliable, and scalable in real-world applications.